Decision trees offer several advantages that make them a popular choice for various machine learning tasks:

Interpretability:

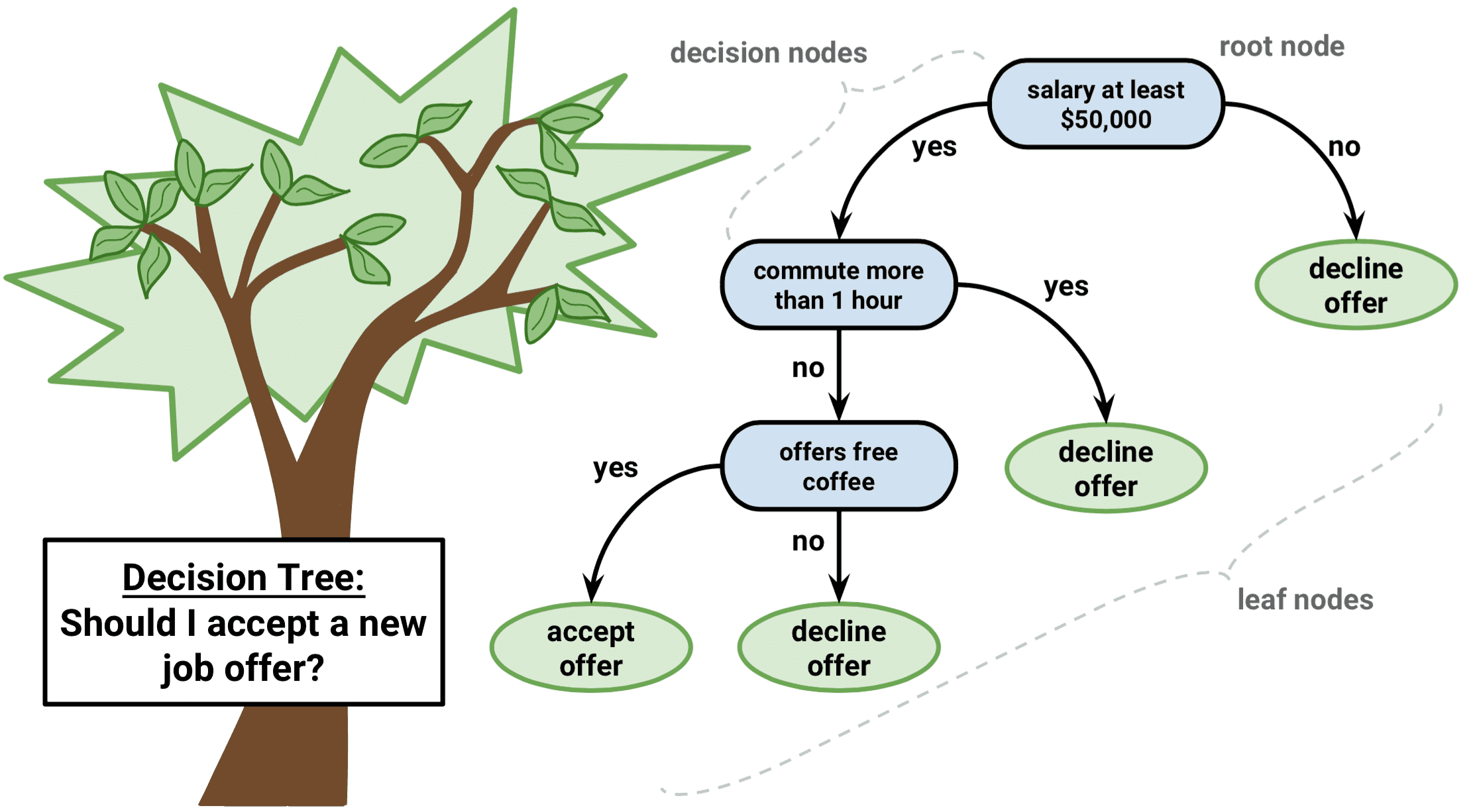

- Decision trees produce a set of simple if-then rules that are easy to read and interpret. Each root-to-leaf path is a sequence of decisions on feature values, which makes a prediction transparent and explainable to stakeholders and domain experts, provided the tree stays reasonably shallow.
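To make the idea of "a sequence of decisions" concrete, the following minimal sketch turns a small tree into one readable rule per leaf. The tree, its feature names (`age`, `income`), thresholds, and labels are hypothetical and hand-written for illustration; they are not learned from data.

```python
# Hypothetical hand-written tree: internal nodes test "feature <= threshold",
# leaves carry a predicted label. Nothing here is learned from data.
tree = {
    "feature": "age", "threshold": 30,
    "left": {"label": "decline"},
    "right": {
        "feature": "income", "threshold": 50000,
        "left": {"label": "decline"},
        "right": {"label": "approve"},
    },
}


def to_rules(node, conditions=()):
    """Return one human-readable rule per leaf (one root-to-leaf path each)."""
    if "label" in node:
        premise = " and ".join(conditions) or "always"
        return [f"if {premise} then {node['label']}"]
    name, t = node["feature"], node["threshold"]
    return (
        to_rules(node["left"], conditions + (f"{name} <= {t}",))
        + to_rules(node["right"], conditions + (f"{name} > {t}",))
    )


rules = to_rules(tree)
for rule in rules:
    print(rule)
```

Each printed line corresponds to exactly one leaf, so the number of rules grows with the number of leaves. This is why deep trees become harder to read than shallow ones.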

Little Data Preprocessing Required:

- Decision trees split on thresholds, so they do not need feature scaling or normalization. They can work with both numerical and categorical data, although some implementations expect categorical features to be numerically encoded first. This simplifies data preparation and saves time and effort.
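The claim about feature scaling can be checked with a small sketch. The helper names `gini` and `best_split` are illustrative, not taken from any library, and the data is made up. The sketch searches for the single threshold with the lowest weighted Gini impurity, then repeats the search after an arbitrary change of units.

```python
def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(xs, ys):
    """Return (threshold, left_indices) with the lowest weighted Gini impurity.

    Candidate thresholds are midpoints between consecutive distinct values,
    so xs must contain at least two distinct values.
    """
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [i for i, x in enumerate(xs) if x <= threshold]
        right = [i for i, x in enumerate(xs) if x > threshold]
        score = (
            len(left) * gini([ys[i] for i in left])
            + len(right) * gini([ys[i] for i in right])
        ) / len(xs)
        if best is None or score < best[0]:
            best = (score, threshold, left)
    return best[1], best[2]


ys = [0, 0, 0, 1, 1, 1]                       # made-up labels
raw = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]       # made-up feature values
scaled = [1000 * x + 5 for x in raw]          # arbitrary change of units

raw_threshold, raw_left = best_split(raw, ys)
scaled_threshold, scaled_left = best_split(scaled, ys)

print(raw_threshold, raw_left)
print(scaled_threshold, scaled_left)
```

The threshold value changes with the units, but the same samples end up on the left side of the split, so the resulting partition (and hence the predictions) is unaffected by the rescaling.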

Handles Nonlinear Relationships:

- Decision trees can capture nonlinear relationships and interactions between features and the target variable. They recursively partition the feature space based on the most informative splits, allowing them to model complex decision boundaries effectively.
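XOR is a standard small example of such an interaction: neither feature alone predicts the label, but two nested splits do. The sketch below uses a hand-written depth-2 tree rather than a learned one, and the function names are illustrative.

```python
# XOR: the label is 1 exactly when the two binary features differ.
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def stump_accuracy(feature):
    """Best accuracy of a single split on one feature at threshold 0.5."""
    correct = 0
    for side in (0, 1):
        labels = [y for x, y in data if x[feature] == side]
        # A leaf predicts its majority label, so it gets that many points right.
        correct += max(labels.count(0), labels.count(1))
    return correct / len(data)


def depth2_tree(x):
    """Hand-written depth-2 tree: split on feature 0, then on feature 1."""
    if x[0] <= 0.5:
        return 1 if x[1] > 0.5 else 0
    return 0 if x[1] > 0.5 else 1


tree_accuracy = sum(depth2_tree(x) == y for x, y in data) / len(data)
print(stump_accuracy(0), stump_accuracy(1), tree_accuracy)
```

A single split on either feature is right only half the time, while the two-level tree classifies all four points correctly by combining both features along each path.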

Handles Missing Values:

- Some decision tree implementations can handle missing values directly, for example by using surrogate splits or by substituting the most common category for a missing categorical value. Where this is supported, it reduces the need for separate imputation; other implementations still require missing values to be imputed beforehand.

Feature Importance:

- Decision trees provide a measure of feature importance, typically based on how much each feature's splits reduce impurity, indicating which features are most influential in making predictions. This helps identify relevant variables and understand patterns in the data, aiding feature selection and model interpretation.
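One common way to score importance is by impurity reduction. The sketch below is a simplified stand-in: it scores each feature by the Gini decrease of a single split at a fixed, hypothetical threshold and normalizes the scores to sum to 1. Library implementations typically accumulate this quantity over every node of a fitted tree; the data and names here are made up for illustration.

```python
def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def impurity_decrease(xs, ys, threshold):
    """Parent impurity minus the size-weighted impurity of the two children."""
    left = [y for x, y in zip(xs, ys) if x <= threshold]
    right = [y for x, y in zip(xs, ys) if x > threshold]
    weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
    return gini(ys) - weighted


ys = [0, 0, 0, 1, 1, 1]
features = {
    "informative": [1, 2, 3, 7, 8, 9],  # separates the classes at 5
    "noisy": [1, 9, 2, 8, 3, 7],        # barely related to the label
}

# Hypothetical fixed threshold of 5 for both features, chosen for illustration.
decreases = {
    name: impurity_decrease(xs, ys, threshold=5) for name, xs in features.items()
}
total = sum(decreases.values())
importance = {name: d / total for name, d in decreases.items()}
print(importance)
```

The feature whose split separates the classes cleanly receives most of the normalized importance, while the noisy feature receives only a small share.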

Robust to Outliers:

- Decision trees are fairly robust to outliers in the input features, because a split depends only on which side of a threshold a value falls, not on how far from the threshold it lies. Outliers may shift individual splits, but they are less likely to affect overall model performance.

Efficient for Large Datasets:

- Tree-building algorithms such as CART or ID3 grow the tree greedily, one split at a time, so decision trees can be trained on large datasets with relatively low computational overhead, and a prediction only requires following a single root-to-leaf path. Ensembles such as Random Forests or Gradient Boosting Machines improve predictive performance further while remaining scalable.

Versatility:

- Decision trees can be applied to both classification and regression tasks, making them versatile across a wide range of applications. They are used in various domains, including healthcare, finance, marketing, and industrial applications.
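The difference between the two tasks shows up mainly in what a leaf returns. As a minimal sketch (the function name and `task` argument are illustrative), a classification leaf can predict the majority class of the training samples that reach it, while a regression leaf can predict their mean.

```python
from collections import Counter
from statistics import mean


def leaf_prediction(targets, task):
    """Aggregate the training targets that ended up in one leaf."""
    if task == "classification":
        # Majority class among the samples in the leaf.
        return Counter(targets).most_common(1)[0][0]
    if task == "regression":
        # Average target value among the samples in the leaf.
        return mean(targets)
    raise ValueError(f"unknown task: {task}")


class_leaf = leaf_prediction(["spam", "ham", "spam"], "classification")
value_leaf = leaf_prediction([1.0, 2.0, 6.0], "regression")
print(class_leaf, value_leaf)
```

The splitting machinery above the leaves stays the same in both cases; only the split criterion and this leaf-level aggregate change with the task.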

Ensemble Methods:

- Decision trees can be combined into ensemble methods such as Random Forests or Gradient Boosting Machines, which aggregate multiple trees to improve predictive accuracy and generalization performance. Ensemble methods help mitigate overfitting and enhance model robustness.
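The aggregation step can be sketched with a simple majority vote. The three prediction lists below are hypothetical outputs of three trees, constructed so that each tree errs on a different sample. This illustrates the voting used by bagging-style ensembles such as Random Forests; Gradient Boosting combines trees differently, by summing their outputs.

```python
from collections import Counter

truth = [1, 0, 1, 1, 0]

# Hypothetical predictions from three trees; each is wrong on a different sample.
tree_predictions = [
    [0, 0, 1, 1, 0],
    [1, 1, 1, 1, 0],
    [1, 0, 1, 0, 0],
]


def accuracy(predictions):
    """Fraction of predictions that match the true labels."""
    return sum(p == t for p, t in zip(predictions, truth)) / len(truth)


# Majority vote per sample across the trees.
vote = [Counter(column).most_common(1)[0][0] for column in zip(*tree_predictions)]

print([accuracy(p) for p in tree_predictions], accuracy(vote))
```

The vote corrects each individual mistake only because the trees disagree on where they are wrong. Encouraging that diversity is the purpose of the bootstrap sampling and feature subsampling used when training a Random Forest.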

Ease of Implementation:

- Decision trees are relatively simple to implement and visualize. Several libraries and frameworks provide implementations of decision tree algorithms, making them accessible to practitioners and researchers.
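As one example of such a library, the sketch below assumes scikit-learn is installed and uses its bundled Iris dataset; the choice of library, dataset, and `max_depth=3` is an assumption made for illustration, not something prescribed by the text above.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# A shallow tree keeps the printed rules short enough to read.
clf = DecisionTreeClassifier(max_depth=3, random_state=0)
clf.fit(iris.data, iris.target)

# Text rendering of the learned splits, one line per node.
rules = export_text(clf, feature_names=list(iris.feature_names))
print(rules)

train_accuracy = clf.score(iris.data, iris.target)
print(f"training accuracy: {train_accuracy:.3f}")
```

The accuracy printed here is measured on the training data, so it overstates how well the tree would do on unseen samples; a held-out split would be needed for a fair estimate.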

Overall, the advantages of decision trees, including interpretability, simplicity, robustness, and versatility, make them a valuable tool in the machine learning toolbox for both exploratory analysis and predictive modeling.
