How Does Cache Memory Work?

Cache memory works based on the principle of locality, which refers to the tendency of a program to access a relatively small portion of its address space or data at any given time. There are two main types of locality: temporal locality and spatial locality.

- Temporal Locality:
  - Temporal locality refers to the tendency of a program to access the same memory locations repeatedly over a short period of time. Cache memory exploits temporal locality by storing recently accessed data and instructions in the cache, allowing the CPU to access them quickly without having to retrieve them from the slower main memory repeatedly.

- Spatial Locality:
  - Spatial locality refers to the tendency of a program to access memory locations that are near each other in terms of address space. Cache memory exploits spatial locality by storing contiguous blocks of memory, known as cache lines or cache blocks, rather than individual data items. When the CPU accesses a memory location, cache memory retrieves an entire cache line containing that location and stores it in the cache, anticipating that nearby memory locations will also be accessed soon.
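The effect of both kinds of locality can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the 64-byte line size, the function name `count_line_fetches`, and the three access patterns are hypothetical choices, and the cache is assumed to be large enough that nothing is ever evicted.

```python
LINE_SIZE = 64  # bytes per cache line (assumed value, not taken from the text)


def count_line_fetches(addresses, line_size=LINE_SIZE):
    """Count how many cache lines must be fetched from main memory
    for a sequence of byte addresses, assuming nothing is ever evicted."""
    cached_lines = set()
    fetches = 0
    for address in addresses:
        line = address // line_size  # the line that contains this address
        if line not in cached_lines:
            cached_lines.add(line)   # the whole line is brought into the cache
            fetches += 1
    return fetches


# Spatial locality: 256 consecutive 4-byte elements share a few lines.
sequential = [i * 4 for i in range(256)]
# Poor spatial locality: each access lands on a different line.
strided = [i * LINE_SIZE for i in range(256)]
# Temporal locality: the same four addresses are reused many times.
repeated = [0, 4, 8, 12] * 64

print(count_line_fetches(sequential))  # 16
print(count_line_fetches(strided))     # 256
print(count_line_fetches(repeated))    # 1
```

All three patterns make 256 accesses, but the sequential and repeated patterns need far fewer line fetches because most accesses fall in a line that is already cached.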

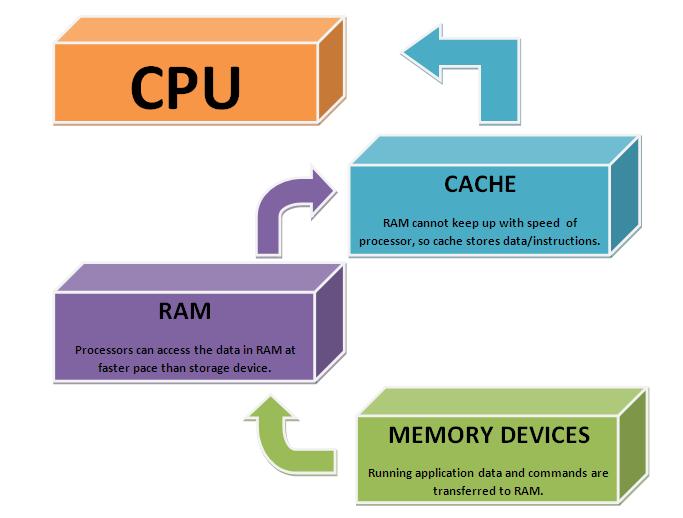

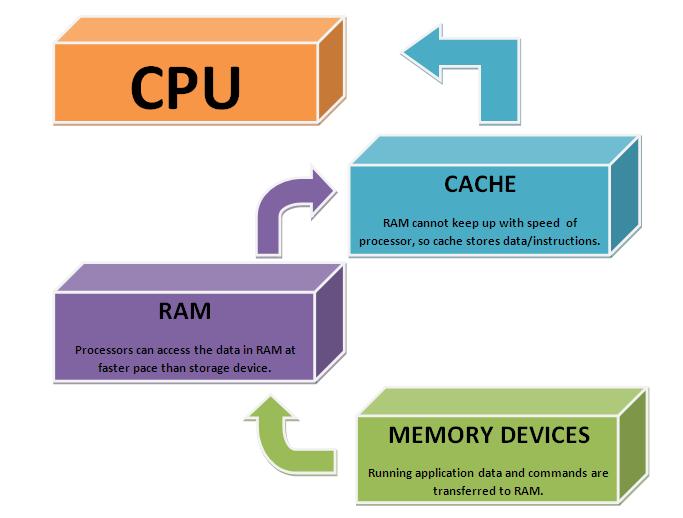

The operation of cache memory typically involves the following steps:

- Cache Lookup:
  - When the CPU requests data or instructions, the cache memory checks whether the requested information is already stored in the cache. This is known as a cache lookup.

- Cache Hit:
  - If the requested information is found in the cache (cache hit), the cache memory retrieves it and provides it to the CPU. Cache hits result in faster access times since the CPU does not have to wait for the data to be retrieved from the slower main memory.

- Cache Miss:
  - If the requested information is not found in the cache (cache miss), it must be retrieved from the main memory. This process is slower and results in longer access times compared to cache hits.

- Data Transfer:
  - On a cache miss, the cache memory retrieves the requested data or instructions from the main memory and stores them in the cache for future access. Additionally, cache memory may use prefetching techniques to anticipate future memory accesses and proactively load data into the cache before it is requested by the CPU.

- Cache Replacement:
  - Cache memory has a limited capacity, so it may need to replace existing data or instructions in the cache to make room for new entries. Cache replacement policies, such as least recently used (LRU) or random replacement, determine which cache lines are evicted when the cache is full.
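The steps above can be sketched as a small simulation. This is a simplified model, not how any particular CPU implements its cache: the class name `LRUCacheSim`, the 64-byte line size, and the two-line capacity are hypothetical, the cache is assumed to be fully associative (any line can go in any slot), and only line numbers are tracked rather than actual data.

```python
from collections import OrderedDict


class LRUCacheSim:
    """Toy fully associative cache with LRU replacement (illustrative only)."""

    def __init__(self, num_lines, line_size=64):
        self.num_lines = num_lines
        self.line_size = line_size
        self.lines = OrderedDict()  # line number -> placeholder, oldest first
        self.hits = 0
        self.misses = 0
        self.evictions = 0

    def access(self, address):
        line = address // self.line_size
        if line in self.lines:                  # cache lookup
            self.lines.move_to_end(line)        # mark as most recently used
            self.hits += 1
            return "hit"
        self.misses += 1                        # cache miss
        if len(self.lines) >= self.num_lines:   # cache is full
            self.lines.popitem(last=False)      # evict the least recently used line
            self.evictions += 1
        self.lines[line] = True                 # data transfer: fill from main memory
        return "miss"


cache = LRUCacheSim(num_lines=2)
trace = [0, 8, 64, 0, 128, 64]  # byte addresses (hypothetical)
results = [cache.access(address) for address in trace]
print(results)  # ['miss', 'hit', 'miss', 'hit', 'miss', 'miss']
print(cache.hits, cache.misses, cache.evictions)  # 2 4 2
```

In this trace, the access to address 128 evicts the line holding address 64 because the line holding address 0 was used more recently, so the final access to 64 misses again. Prefetching is left out of the sketch to keep it short.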

By storing frequently accessed data and instructions close to the CPU and minimizing the need to access slower main memory, cache memory helps improve overall system performance and efficiency. The effectiveness of cache memory depends on factors such as cache size, cache organization, cache coherence protocols, and cache replacement policies.
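The performance benefit can be estimated with the standard textbook model for average memory access time: hit time plus miss rate times miss penalty. The sketch below applies that model; the function name and the latency figures are hypothetical values chosen for illustration, not measurements.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Assumed figures: 1 ns cache hit, 100 ns extra cost to reach main memory.
good_locality = average_access_time(1.0, 0.05, 100.0)  # 5% of accesses miss
poor_locality = average_access_time(1.0, 0.20, 100.0)  # 20% of accesses miss

print(f"{good_locality:.1f} ns vs {poor_locality:.1f} ns")
```

Under these assumed numbers, raising the miss rate from 5% to 20% makes the average access several times slower, which is why cache size, organization, and replacement policy matter.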
