What Is ETL in a Data Warehouse?

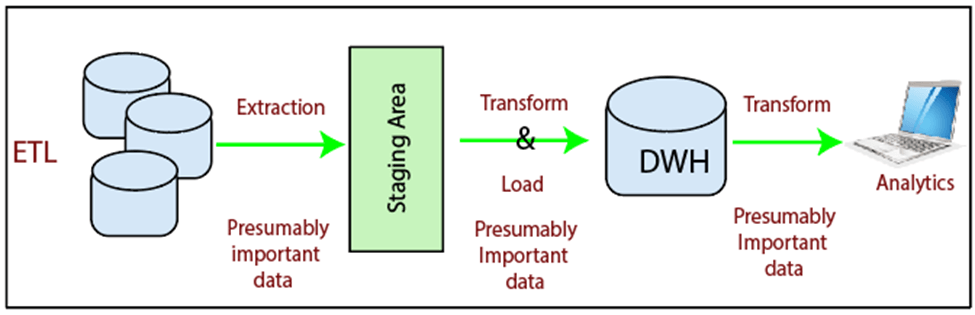

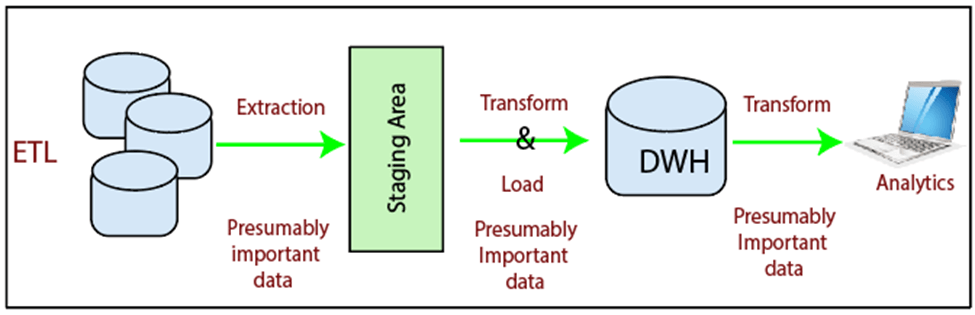

ETL stands for Extract, Transform, Load: the process of extracting data from source systems, transforming it into a suitable format, and loading it into a target data warehouse. It is a core part of data warehousing because it ensures that data is integrated, cleaned, and organized for effective analysis and reporting.

Here's a breakdown of each step in the ETL process:

Extract:

- Definition: In the extraction phase, data is pulled from various source systems, such as databases, flat files, applications, and other repositories.

- Process:

  - Data is identified and selected from source systems based on specific criteria or queries.

  - An extraction can be full (the entire data set) or incremental (only the changes since the last extraction).

  - Data extraction can be scheduled at regular intervals to keep the data warehouse up to date.
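The difference between a full and an incremental extraction can be sketched in a few lines of Python. This is a minimal illustration, not a production extractor: the in-memory SQLite database stands in for a source system, and the `orders` table, its `updated_at` column, and the `extract_orders` function are hypothetical names chosen for the example.

```python
import sqlite3


def extract_orders(conn, last_extracted_at=None):
    """Return every row (full extract) or only rows changed after the watermark."""
    if last_extracted_at is None:
        cursor = conn.execute(
            "SELECT id, customer, amount, updated_at FROM orders ORDER BY id"
        )
    else:
        # ISO 8601 timestamps stored as text compare correctly as strings.
        cursor = conn.execute(
            "SELECT id, customer, amount, updated_at FROM orders "
            "WHERE updated_at > ? ORDER BY id",
            (last_extracted_at,),
        )
    return cursor.fetchall()


# Hypothetical source system, simulated with an in-memory database.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer TEXT, amount REAL, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        (1, "alice", 120.0, "2024-01-01T08:00:00"),
        (2, "bob", 75.5, "2024-01-02T09:30:00"),
        (3, "carol", 40.0, "2024-01-03T10:15:00"),
    ],
)

full_extract = extract_orders(source)
incremental_extract = extract_orders(source, last_extracted_at="2024-01-01T23:59:59")
print(len(full_extract), "rows in full extract")
print(len(incremental_extract), "rows changed since the last run")
```

In a scheduled job, the watermark passed as `last_extracted_at` would typically be saved after each successful run and reused on the next one.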

Transform:

- Definition: Transformation involves cleaning, structuring, and converting the extracted data into a format suitable for storage and analysis in the data warehouse.

- Process:

  - Data cleansing: Addressing issues such as missing values, duplicates, and inconsistencies.

  - Data structuring: Organizing data into a format compatible with the data warehouse's schema, such as a star schema or snowflake schema.

  - Data enrichment: Adding data elements or calculated fields to enhance the dataset.

  - Data validation: Ensuring data accuracy and adherence to business rules.

  - Data quality handling: Resolving or flagging records that fail these checks, so that only reliable data reaches the warehouse.
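As a rough sketch of how cleansing, validation, and enrichment might look in code, the Python example below processes a handful of raw records. The field names, the rule that an amount must be present and non-negative, and the `is_large_order` flag are all assumptions made up for the illustration, not rules from any particular warehouse.

```python
def transform(records):
    """Cleanse, validate, and enrich raw records; return (clean, rejected)."""
    clean, rejected, seen_ids = [], [], set()
    for record in records:
        order_id = record.get("id")

        # Cleansing: skip records whose id has already been processed.
        if order_id in seen_ids:
            continue
        seen_ids.add(order_id)

        # Cleansing: normalize inconsistent spacing and casing.
        customer = (record.get("customer") or "").strip().lower()
        amount = record.get("amount")

        # Validation (assumed business rule): a customer name is required
        # and the amount must be present and non-negative.
        if not customer or amount is None or amount < 0:
            rejected.append(record)
            continue

        # Enrichment: add a derived flag for downstream reporting.
        clean.append(
            {
                "id": order_id,
                "customer": customer,
                "amount": round(float(amount), 2),
                "is_large_order": amount >= 100,
            }
        )
    return clean, rejected


raw = [
    {"id": 1, "customer": " Alice ", "amount": 120.0},
    {"id": 1, "customer": " Alice ", "amount": 120.0},  # duplicate
    {"id": 2, "customer": "BOB", "amount": None},  # missing value
    {"id": 3, "customer": "carol", "amount": -5.0},  # breaks the assumed rule
    {"id": 4, "customer": "Dave", "amount": 40.0},
]

clean, rejected = transform(raw)
print("clean:", clean)
print("rejected:", rejected)
```

Returning the rejected records separately, rather than silently dropping them, keeps data quality problems visible so they can be corrected at the source.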

Load:

- Definition: Loading involves inserting the transformed data into the data warehouse, making it available for querying and analysis.

- Process:

  - Loading can be done in different ways, such as bulk loading for large datasets or incremental loading for ongoing updates.

  - Data is inserted into predefined tables within the data warehouse structure.

  - Loading processes are often optimized for performance so that new data becomes available quickly.
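The two loading styles can be illustrated with a short Python sketch. It assumes an in-memory SQLite database as a stand-in for the warehouse and a hypothetical `fact_orders` table; real warehouses usually provide their own bulk-load and merge facilities, so treat this only as a picture of the idea.

```python
import sqlite3


def load(conn, rows):
    """Insert new rows and overwrite existing ones, matched on the primary key."""
    # The connection context manager wraps the batch in one transaction:
    # it commits on success and rolls back if any row fails.
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO fact_orders (id, customer, amount) "
            "VALUES (:id, :customer, :amount)",
            rows,
        )


# Hypothetical warehouse with a predefined target table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE fact_orders ("
    "id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
)

# Initial bulk load of the full data set.
load(
    warehouse,
    [
        {"id": 1, "customer": "alice", "amount": 120.0},
        {"id": 2, "customer": "bob", "amount": 75.5},
    ],
)

# Incremental load: one changed row (id 2) and one new row (id 3).
load(
    warehouse,
    [
        {"id": 2, "customer": "bob", "amount": 80.0},
        {"id": 3, "customer": "carol", "amount": 40.0},
    ],
)

rows = warehouse.execute("SELECT id, amount FROM fact_orders ORDER BY id").fetchall()
print(rows)
```

Sending rows in batches through `executemany` inside a single transaction is one common way to keep loads fast, and it also means a failed batch leaves the target table unchanged.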

The ETL process is crucial for maintaining the integrity and quality of data in a data warehouse. It enables organizations to integrate data from diverse sources, ensuring that it is consistent, accurate, and in a format conducive to analysis. ETL also supports the creation of a historical data repository, allowing organizations to track changes over time and analyze trends.

Various ETL tools, both commercial and open-source, are available to automate and streamline these processes. Many of them provide graphical interfaces and workflows to design, schedule, and monitor ETL jobs, making it easier for organizations to manage the complexities of data integration in a data warehouse environment.
